The Epoch…

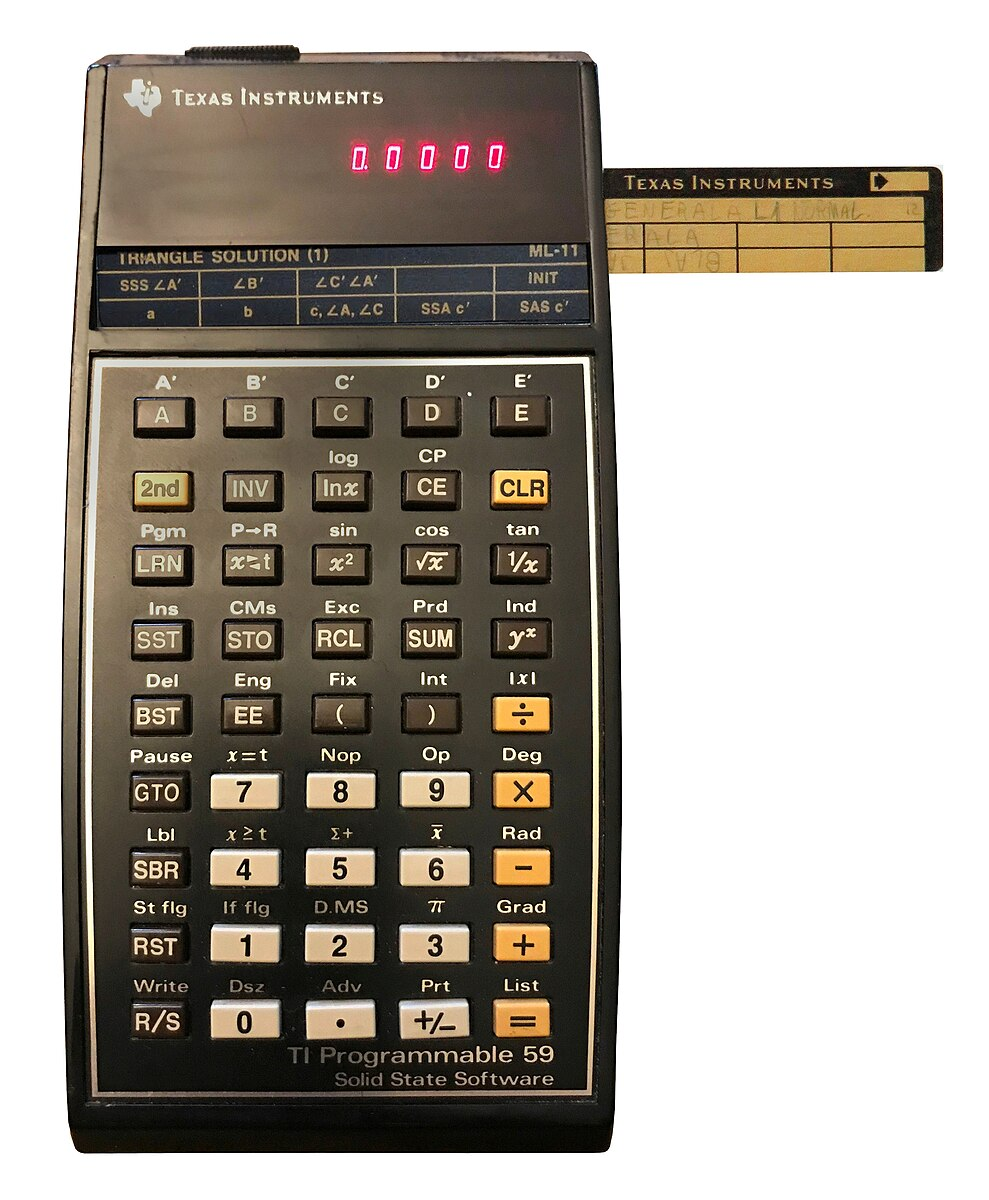

Starting with a TI-59, I took my first steps with “computers” and programming the hard way…

Yes: a single-line, single-colour display, 1 KB of mass storage, and very limited CPU power. Still, imagination is the only limit, and if it’s programmable, let’s program it!

Then I tried the ZX81 (with the 16 KB RAM extension and the thermal printer!).

This “computer” had a fast mode: since its only CPU was busy rendering the black-and-white screen output, and was therefore available to run the program only between frames, it was possible to boost its speed dramatically by… switching the display rendering off while the program had the CPU!

Next, I had the opportunity to attend my first programming lessons, on an Apple II:

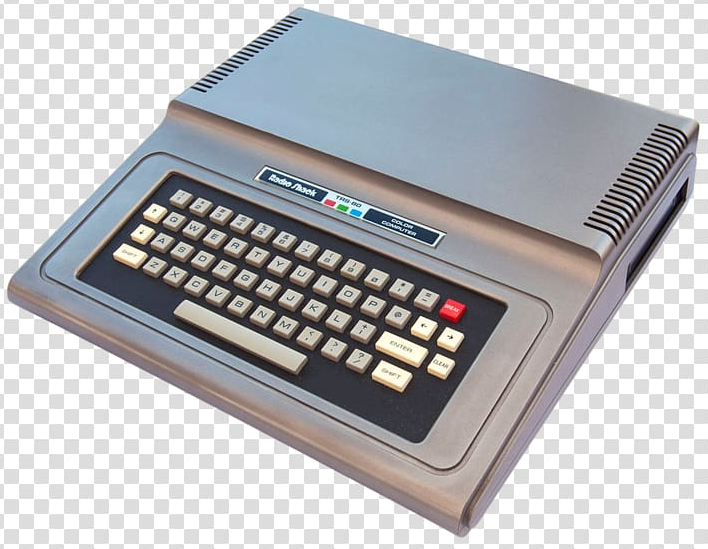

As it was amazing and promising, my family went to the expense of buying a “serious” one: the TRS-80 Color from Tandy, with its incredible 16 KB of RAM!

This machine used a plain audio tape recorder as mass storage and the TV set as a display (through an RF coax output), and it came with the full electronic schematics and examples of the signals as they should appear on an oscilloscope screen, in case of need…

Fitted with a Motorola 6809E clocked at the incredible speed of 0.8 MHz (yes, 800 kHz), it was best programmed in BASIC or by direct binary coding (I mean… direct hexadecimal pokes into RAM, as no assembler was provided…). It was my first opportunity to actually understand, manage and program a computer at the hardware level!

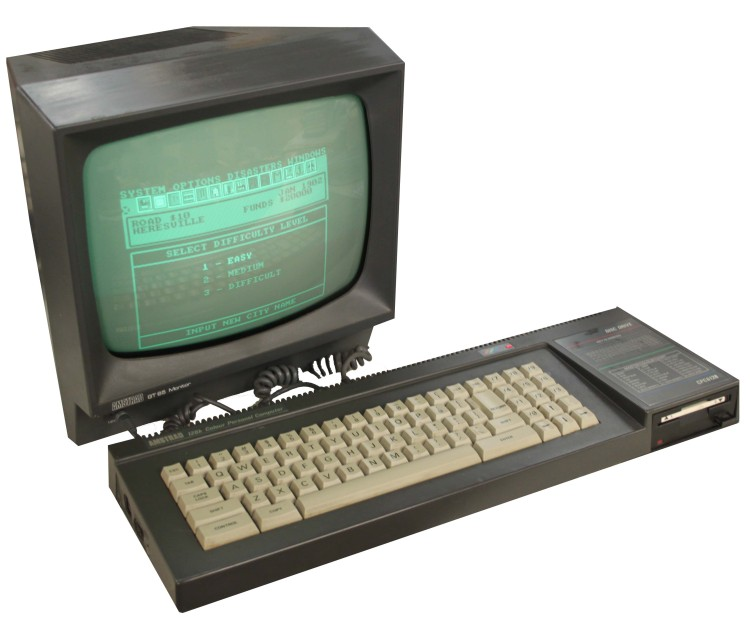

Then I moved to a “serious” one, with an operating system (CP/M): the Amstrad-Schneider CPC6128.

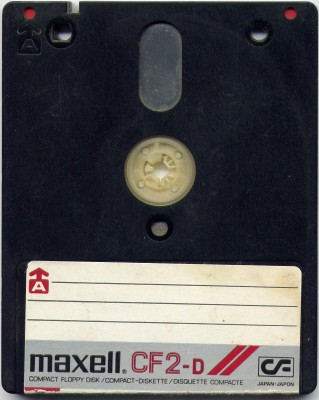

With an incredible 128 KB of RAM (yes, 0.128 MB), not all accessible at once (the “high” part consisted of swappable pages of RAM, as the CPU address space was limited to 64 KB), it came with C and Pascal compilers. The built-in floppy drive used special 3-inch disks, so 180 KB of mass storage at a time!
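To make the paging idea concrete, here is a minimal Python sketch of bank switching. It is a simplified, hypothetical model (the class `BankedMemory` and its methods are illustrative names, not a CPC6128 emulation): the CPU’s 64 KB address space is treated as four 16 KB windows, and any of eight 16 KB physical banks can be swapped into any window. The real machine’s mapping rules were more restrictive.

```python
WINDOW = 16 * 1024   # the CPU's 64 KB address space, seen as four 16 KB windows
PHYS_BANKS = 8       # 128 KB of physical RAM = eight 16 KB banks

class BankedMemory:
    """Toy bank-switching model: 16-bit addresses reach only 64 KB, so the extra
    RAM becomes visible by changing which physical bank sits behind a window."""

    def __init__(self) -> None:
        self.ram = bytearray(PHYS_BANKS * WINDOW)  # all 128 KB physically present
        self.mapping = [0, 1, 2, 3]                # window -> bank ("low" 64 KB by default)

    def map_bank(self, window: int, bank: int) -> None:
        """Hypothetical stand-in for writing the hardware's memory-mapping register."""
        self.mapping[window] = bank

    def _physical(self, addr: int) -> int:
        if not 0 <= addr < 4 * WINDOW:
            raise ValueError("a 16-bit CPU address cannot reach beyond 64 KB")
        window, offset = divmod(addr, WINDOW)
        return self.mapping[window] * WINDOW + offset

    def poke(self, addr: int, value: int) -> None:
        self.ram[self._physical(addr)] = value

    def peek(self, addr: int) -> int:
        return self.ram[self._physical(addr)]

mem = BankedMemory()
mem.poke(0x4000, 42)     # window 1 -> physical bank 1
mem.map_bank(1, 5)       # swap bank 5 (in the "high" 64 KB) into window 1
mem.poke(0x4000, 99)     # same CPU address, different physical byte
print(mem.peek(0x4000))  # 99
mem.map_bank(1, 1)       # swap the original bank back in
print(mem.peek(0x4000))  # 42
```

The same CPU address (`0x4000`) reaches two different physical bytes depending on the current mapping, which is why the “high” 64 KB was never visible all at once.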

At that time, there was no public Internet and there were no modems, so the way to get software was… by post.

After years of reliable service, I had the opportunity to buy my first 10 MB hard drive, a full-sized, heavy lump of metal designed for the emerging IBM PC-XT.

The real charm of this one was that you could physically hear its seek time, as it exceeded 100 ms! Don’t drop it on your foot: it’s not the hard drive that will break…

The missing part was then… the surrounding PC-XT, which came not long after, clocked at the incredible speed of 4.77 MHz.

Running DOS (PC DOS from IBM, DR-DOS from Digital Research, or, later on, MS-DOS), it was fitted with 256 KB of RAM. With Norton Commander (what else?), everything was possible!

The first valuable software then arrived by mail, for example from Walnut Creek CDROM, which provided the 32-bit C compiler from the GNU project!

At that time, software was freely available to everybody, mostly as source code, costing only a postage stamp and the (noticeable) price of the floppies.

The floppies were so expensive that there were devices dedicated to punching a symmetric hole in them, so that a “single-sided” floppy could become (with some luck) a double-sided one!

With practice, a standard hole punch or even nail scissors could do the trick!

A first eye-opening experience: not only was all the software I had developed for my Texas Instruments calculator lost, because it was not written in a portable language, but so was all the software I wrote for my TRS-80, because its BASIC dialect was not compatible with the one provided with the IBM PC-XT, and because its storage media were not supported. Despite some attempts to make the two communicate through serial links (RS-232, at up to 38,400 baud), there was no way to “save” my work, which already represented tens of thousands of hours at that time.

This experience made me aware, early on, of the need to stick to portable standards, not only in programming languages but also in environments, protocols, storage media, connectors, …

From then on, I stuck to standard, portable, sustainable, long-lasting choices. In particular, my interactive matrix calculator, written in plain standard Pascal, was usable “as-is” on the DOS-based IBM PC, as it still is today on almost any computer.

Programming in a hardware-specific language, relying on vendor-specific libraries, or storing work on proprietary media is like building on sand: it works for a while, but it is lost by design in the long term, and that is a pity. Don’t make this mistake!

The Windows delusions…

In the ’80s, in Brussels, I attended the first demo of a brand-new piece of software running on DOS, and therefore on the IBM PC. The guy worked for a little startup from the USA called “MicroSoft” or something like that.

The software demonstrated was called “Windows 3.0”, as there had already been two previous versions distributed in the US (but obviously not in Europe). It was a GUI-based software launcher, a kind of replacement for the DOS prompt.

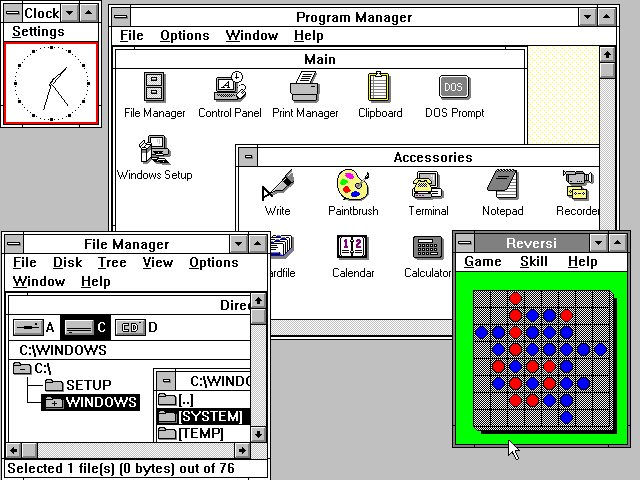

Single-tasking (no preemptive multitasking) and managing RAM no differently from the underlying DOS, it merely offered a more or less common way to access the drives (hard drive and floppy) and to configure the graphics card (Hercules, CGA at best) and printers (for the software that used them through the Windows API).

As it did not actually manage the computer’s resources, it could not really be called an “operating system”. It was more of a software launcher for DOS, providing a graphical user interface layer.

Note: DOS has never been an Operating System. As its name says, it was a Disk Operating System, software designed to manage disks, not computers!

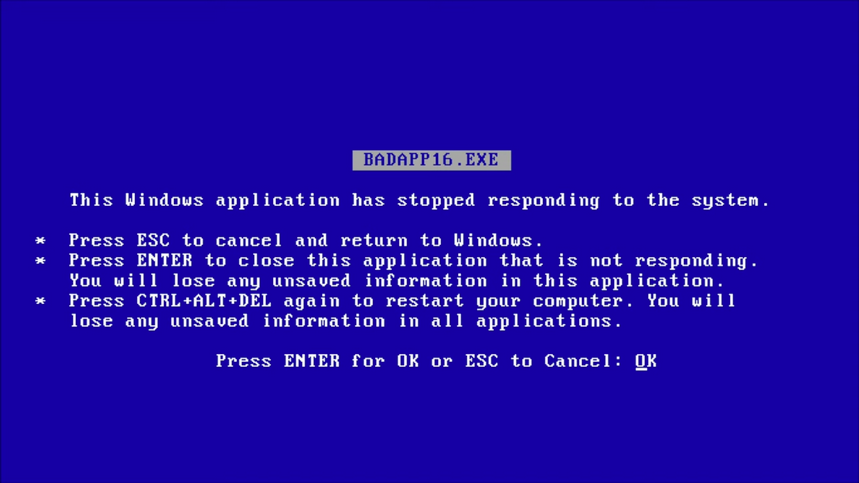

As Windows only roughly mimicked multitasking (in a “cooperative” way), any application that failed to return control to the main Windows process caused a general freeze, making it necessary to physically reset the whole computer. That’s why PCs “enjoyed” a physical reset button for so long, which some called the “continue button”…
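The weakness of cooperative multitasking can be sketched in a few lines of Python. In this toy model (all names are hypothetical, and it is not how Windows was implemented internally), generators stand in for applications and `yield` stands in for an application handing control back to the system. The scheduler can only switch tasks when the running one yields.

```python
from collections import deque
from typing import Generator, Iterable, List

Task = Generator[None, None, None]

def polite_task(name: str, steps: int, log: List[str]) -> Task:
    """Well-behaved app: one unit of work, then it hands control back."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # give the CPU back to the scheduler

def greedy_task(name: str, steps: int, log: List[str]) -> Task:
    """Badly-behaved app: does all its work before yielding even once.
    With `while True:` and no yield inside, control would never come back."""
    for i in range(steps):
        log.append(f"{name}:{i}")
    yield

def run_cooperative(tasks: Iterable[Task]) -> None:
    """Round-robin scheduler that can only switch when the running task yields."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)          # runs until the task *chooses* to yield
            ready.append(task)  # it came back: put it at the end of the queue
        except StopIteration:
            pass                # the task has finished

log: List[str] = []
run_cooperative([polite_task("A", 3, log), greedy_task("B", 3, log), polite_task("C", 2, log)])
print(log)  # ['A:0', 'B:0', 'B:1', 'B:2', 'C:0', 'A:1', 'C:1', 'A:2']
```

While `B` runs, nobody else gets the CPU. Replacing its loop with an endless one that never yields makes `run_cooperative` hang forever, which is exactly the freeze described above; a preemptive OS avoids this by interrupting tasks on a timer instead of waiting for them.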

Sometimes, as there was no memory management (memory protection, virtual memory, …), the whole system crashed spontaneously and mysteriously, leading to the loss of all unsaved work in progress.

But as the situation was not really better on CP/M-based computers or on DOS PCs, users accepted it. Note that, at that time, properly working computers (running Unices) were already available, with a GUI, a mouse and virtual memory management. But the hardware was so expensive that it was reserved for professional users, universities, scientists and so on.

This situation (total system unreliability, unstable computers, dramatically unsafe software design) lasted until Windows Millennium.

However, like everything new, it was worth the pain of trying, so I used it alongside the stable, properly working computers found at the university (which, of course, ran a real OS: various flavours of Unix).

The actual reason the IBM PC was a success, despite this badly broken software layer (DOS + Windows), is that IBM decided to publish the IBM PC architecture specifications openly, which greatly widened the range of suppliers, especially in Asia. It was the age of “IBM PC-compatible” white boxes at affordable prices. Typically, those more or less self-assembled “clones” cost a third of the price of a genuine IBM PC, so the choice was easy.

That is also why the “PC” replaced the computers widely used at that time, including the “Commodore 64”…

This shows that something standardized and compatible gets produced by multiple vendors, which leads to price competition and reliable, wide availability, and therefore makes it a good choice for consumers, despite its design flaws. The choice of Intel as CPU provider was not the worst of the technical errors IBM made on that one…

During my seven years of study at UCL (Université Catholique de Louvain), which led to two master’s degrees (one in electronics, one in computer science), I fought hard to get access not only to proper (Unix-based) computers but also to the root prompt, in order to learn how to administer computer systems.

At the very end of my studies, around 1992 or 1993, I came across a small OS project written by a European student, Linus Torvalds, and published as source code on the emerging Internet. This system could not access hard drives (it only booted from and dealt with floppies) and only worked with US-layout keyboards, but it had the advantage of running on a standard PC, which was not the case for professional Unices at that time.

As I wanted to run interesting software besides Windows-based applications, I used the development environment distributed on the Walnut Creek CDROM, running directly on DOS, as Windows ran only in 16-bit mode and proper programs required a 32-bit environment. I worked hard, alongside my studies, to earn enough money to buy a Unix licence for the PC, SCO Unix (which I finally never installed…). It was a time when the Internet was accessible only to computer students (at the university). Apart from that, the only alternatives were BBS networks such as FidoNet.

I installed and managed one of the nodes of this BBS network, based on a 38,400 baud (V.42/V.42bis) modem and a single phone line…

I also fought with the university authorities to get dial-up Internet access for students, but unfortunately, the rules at that time did not allow such free, remote access.

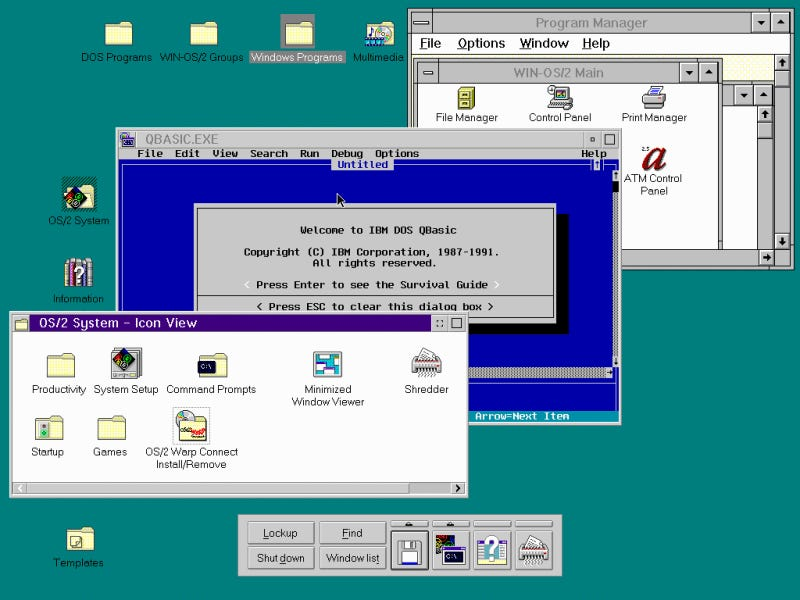

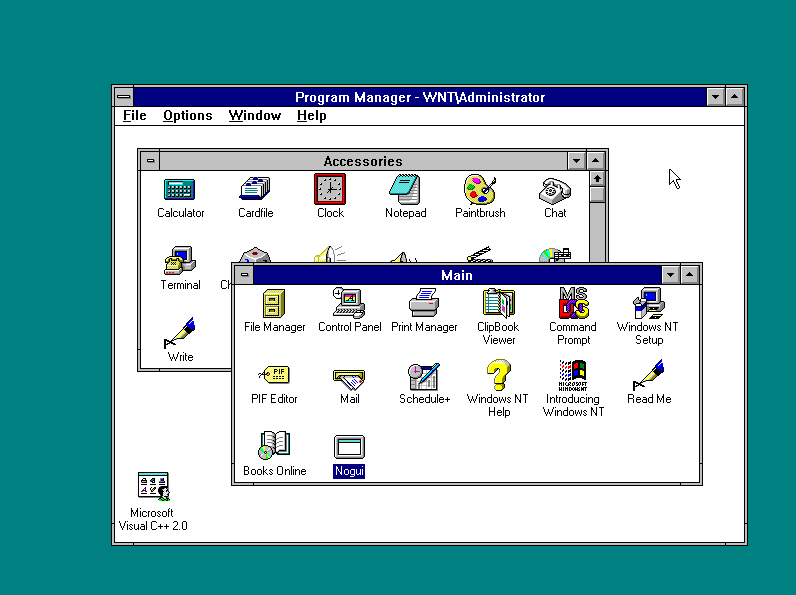

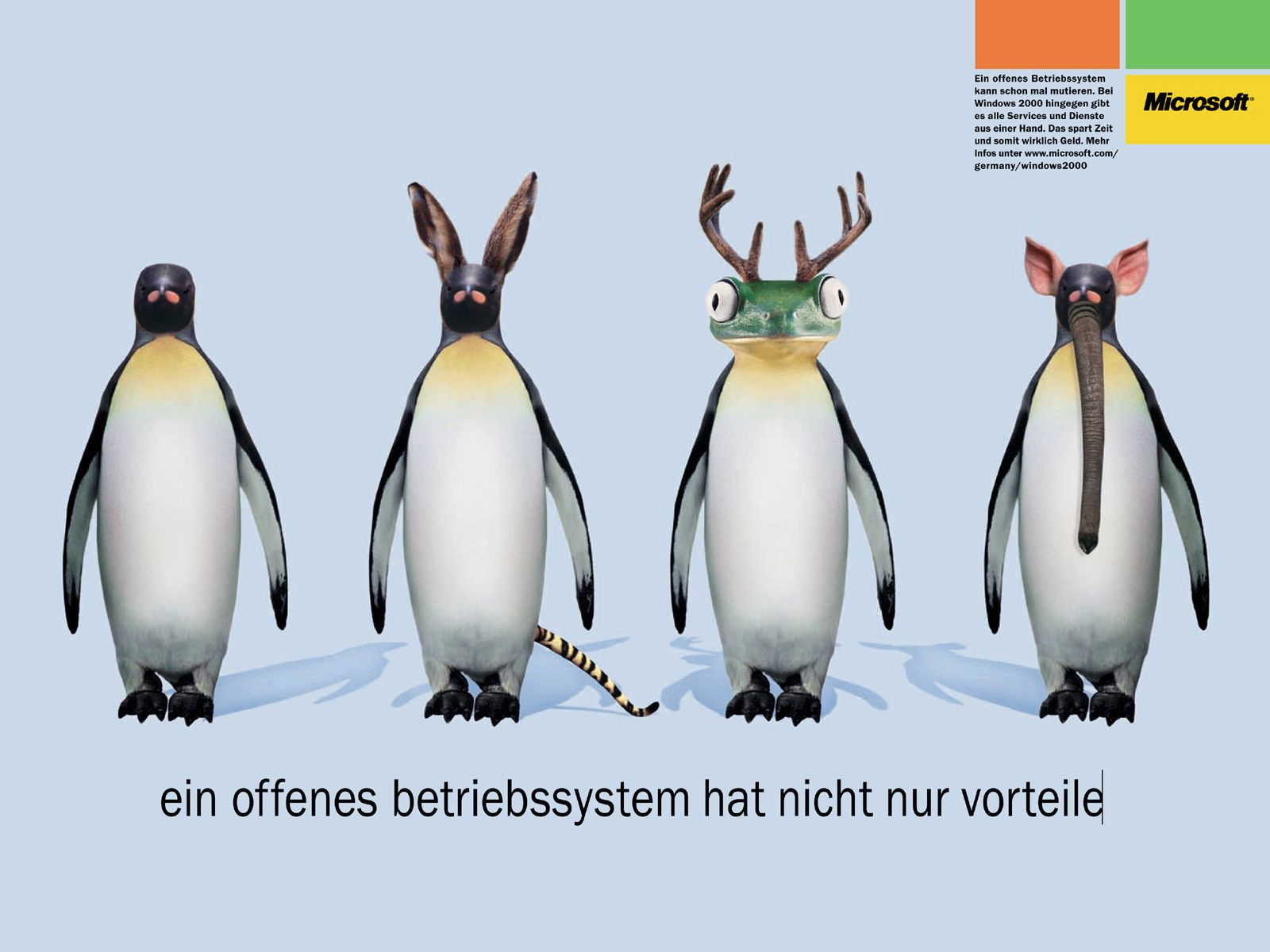

As we were looking for a proper, truly multitasking operating system, able to run several BBS programs in parallel and in real time, and as the joint project of Microsoft and IBM split apart, leading to the first versions of “Windows NT” on one side and OS/2 on the other, I evaluated the two alternatives.

OS/2 was a very stable OS, running in “protected memory mode” and offering true multitasking, and it could survive a crash, or rather a power outage, since the system itself was stable: if brutally power-cycled, it reloaded the user environment, including the open applications and most of their content. It was also modular: it could run plain DOS-box emulations, with DOS-based software inside (our BBS service software). It was even possible to run it without the GUI (which is definitely of no use, especially on a server…).

Besides that, I worked on the evaluation of Windows 2.0 for Microsoft (I have a Microsoft badge with my name printed on it), especially regarding the management of file-system access rights. As far as I know, this product was never released commercially.

The Windows to Win-NT transition

What must be known is that Microsoft never managed to write a proper OS.

Their first DOS was actually bought from Seattle Computer Products, then tweaked more or less successfully; in any case, DOS has never been an Operating System, as explained above.

As for Windows, up to Windows NT, it is a shame: a system that does not manage the computer hardware and is open to viruses (a student could write one as an exercise…), a piece of software that Microsoft promised to make stable but never did (a shame, as they announced the first version, Windows 1.0, in 1983, and the last version of that line was Windows Millennium). Being unable to deliver decent software after nearly 20 years of development, and after all the cash abusively extracted from their victims (the consumers), is a total shame that can only be explained by their business model:

Sell new releases, with the promise that “it should enhance the user’s experience”.

Under such logic, providing a working, stable version would simply kill the business model…

But at some point, Microsoft developed the ambition to write its first genuine operating system, and, knowing it was beyond its capacity, it asked a professional for help: IBM. This led to the development of a new OS whose ambition was huge:

- Be able to manage the hardware (do the actual “Operating System job”)

- Be able to launch Windows-API based applications

- Be able to launch Unix-API (Posix) based applications

- Be able to launch DOS-“API” based applications

- On the same screen

- And give them the possibility to share data (through cut-and-pastes, …)

I saw a demo. Once. An alpha or beta version. But just once, and I have never seen it anywhere else since. And I can’t find anything about it on the web either. Maybe I dreamed it? But I remember precisely where I saw that demo, so…

The point is that Microsoft broke its agreement with IBM before the finished joint OS was released, leading to two branches: OS/2 on one side (by IBM), and Windows NT on the other (which is very different from the original Windows branch: Win 3.0, 3.1, 3.11, WfW, 95, 98 and Millennium). It produced Windows NT 3.1, Windows NT 3.5, Windows NT 4.0 and Windows NT Workstation, one of the most stable and reliable operating systems Microsoft ever published (yes, you understand why: it was made by IBM…).

All the more recent versions of Windows (Windows XP, Windows 2000, Windows 2003, Windows Vista, Windows 8, 10, 11, …) are just updates of that work, achieved by IBM.

Windows NT 4.0 was “as stable as a Microsoft product could be”.

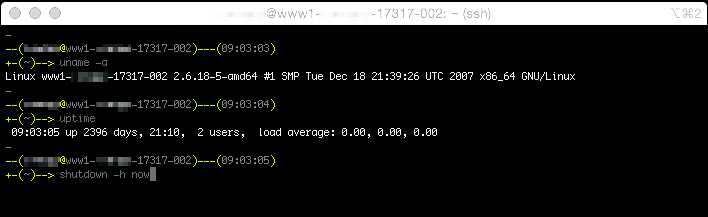

Years after support ended, it was revealed that it crashed systematically after 49.7 days of uptime, because an internal millisecond counter overflowed (the number of milliseconds reached 2³²…).

The reason this “undocumented feature” went unnoticed by most users is that it was highly unlikely that a computer running Windows NT, even though it was supposed to be the most stable Windows version available, could run that long without crashing. The usual “good practice” was to reboot the server at least once a week, generally over the weekend…
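The 49.7-day figure follows directly from the arithmetic of a 32-bit millisecond counter: 2³² ms ≈ 4,294,967 s ≈ 49.71 days. The Python sketch below assumes an unsigned 32-bit counter as described above (the helper names are hypothetical, not Windows APIs) and shows both the wrap-around date and why naive elapsed-time code breaks at that moment, while modular subtraction keeps working.

```python
MS_PER_DAY = 1000 * 60 * 60 * 24  # 86,400,000 milliseconds in a day
WRAP = 2**32                      # an unsigned 32-bit counter holds 0 .. 2**32 - 1

def tick_count(uptime_ms: int) -> int:
    """What a 32-bit millisecond counter shows after `uptime_ms` ms: it silently wraps."""
    return uptime_ms % WRAP

def naive_elapsed(start: int, now: int) -> int:
    """Buggy pattern: plain subtraction goes negative once the counter has wrapped."""
    return now - start

def wrap_safe_elapsed(start: int, now: int) -> int:
    """Correct pattern: subtract modulo 2**32, as unsigned 32-bit arithmetic would."""
    return (now - start) % WRAP

days_to_wrap = WRAP / MS_PER_DAY
print(f"{days_to_wrap:.2f} days")  # 49.71 days

start = tick_count(WRAP - 500)   # 0.5 s before the wrap
now = tick_count(WRAP + 1500)    # 1.5 s after the wrap
print(naive_elapsed(start, now))      # a huge negative number: time "ran backwards"
print(wrap_safe_elapsed(start, now))  # 2000
```

Any code that compares raw counter values, instead of their wrap-safe difference, misbehaves exactly once every 49.7 days, which is why such a bug can hide for years on machines that are rebooted weekly.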

For comparison, the Voyager-1 OS has been running without a reboot since its launch in 1977, and the number of computers with more than 1,000 days of uptime is huge, as long as they run a proper OS (such as Linux, BSD, …).

How can anyone put a hospital, an airport, a ship, a nuclear power plant or a military device under the control of a computer running such an unreliable operating system? Obviously, Microsoft noticed this “detail” and wrote, in black and white, in its licenses that the OS was not suitable for aircraft, nuclear power plants and so on. It’s better to say so, but I’m pretty sure some tried anyway…

There is a way to make computer systems more reliable: put them in a cluster.

The US Navy tried it, and fitted the now famous “Sea Shadow” stealth ship with a Windows NT 3.51 cluster.

After less than 40 miles at sea, both nodes of the cluster had crashed, and the ship had to be shamefully towed back to harbour.

Moreover, every bad practice known to computer scientists made it into Windows NT: case-insensitive file names (leading to security flaws and poor performance) and tolerance of self-modifying code (to keep compatibility with games that ran on the previous flavour of Windows, or on plain DOS).

This “choice” also made the Windows NT family compatible with viruses. That is why there are viruses on the Windows and Windows NT families of systems, but not on MacOS, BSD, Solaris, SunOS, Linux, Android or OS/2, for example…

So Microsoft made the technical decision to open their OS to viruses, just for marketing reasons: to favour the adoption of their new OS through compatibility with existing games… Can you believe it?
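As for the case-insensitive file names mentioned above, here is a hypothetical Python illustration of how they can turn into security flaws (it is not a specific Windows NT vulnerability, and every name in it is made up): a filter that checks names case-sensitively, placed in front of a file system that matches names case-insensitively, lets `CONFIG.SYS` through even though `config.sys` is blocked.

```python
from typing import Set

BLOCKED = {"config.sys", "autoexec.bat"}  # hypothetical list of protected files

def naive_is_allowed(name: str) -> bool:
    """Case-sensitive check: only correct if the file system is case-sensitive too."""
    return name not in BLOCKED

def resolve(name: str, existing: Set[str]) -> str:
    """Toy case-insensitive file system: a name opens any file equal to it, ignoring case."""
    for stored in existing:
        if stored.casefold() == name.casefold():
            return stored
    return name

def safe_is_allowed(name: str) -> bool:
    """Compare names the same way the file system does: case-folded."""
    return name.casefold() not in {b.casefold() for b in BLOCKED}

disk = {"config.sys", "readme.txt"}
request = "CONFIG.SYS"
print(naive_is_allowed(request), resolve(request, disk))  # True config.sys: filter bypassed
print(safe_is_allowed(request))                           # False
```

The general lesson is that every check must compare names exactly the way the file system will resolve them; case-insensitivity multiplies the number of spellings that refer to the same file and makes that easy to get wrong.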

Given this, why didn’t OS/2 take the lead?

For the very same reason the IBM PC took the lead, but the other way round.

The IBM PC was not really a silver bullet, but its specs were released to the world, leading to wide adoption, multiple compatible hardware manufacturers, and therefore general availability and affordable prices.

IBM did the very opposite with OS/2: it tied it to its own hardware only. The installation media only contained drivers for genuine IBM hardware. At the same time, IBM decided to launch a new hardware generation of its leading product: the IBM PS/2, with proprietary buses, connectors, internal architecture, …

IBM OS/2 was made for IBM PS/2, and IBM PS/2 was designed for IBM OS/2.

As the economic advantage of the standard, “compatible” IBM PC was lost, almost nobody (just large companies, bound to IBM by tradition) made the jump. For the few who wanted to run OS/2 on a non-PS/2 computer, it was a tough battle (I did it!), so almost nobody switched to OS/2 on their home computer.

OS/2 was used, however, in many applications requiring high security and availability (reliability), such as ATMs, bank desktops, in-flight entertainment, railway station or airport signage, …

Of course, it is hard to find a picture of a crashed OS/2 computer, as it was much more stable than Windows, even than much more recent versions…

Obviously, in the I.T. sector, it’s not always the best that prevails…

Just a thought for Sun Microsystems, BSD, NeXT, Netscape, Compaq, Digital, … and so many more.

The survivors are not the best, but those that managed to collect enough cash from their sales to survive their mistakes. And also, sadly, those that broke the very principle of competition and open markets, most of the time with the complicity of the authorities that should prevent this from happening…

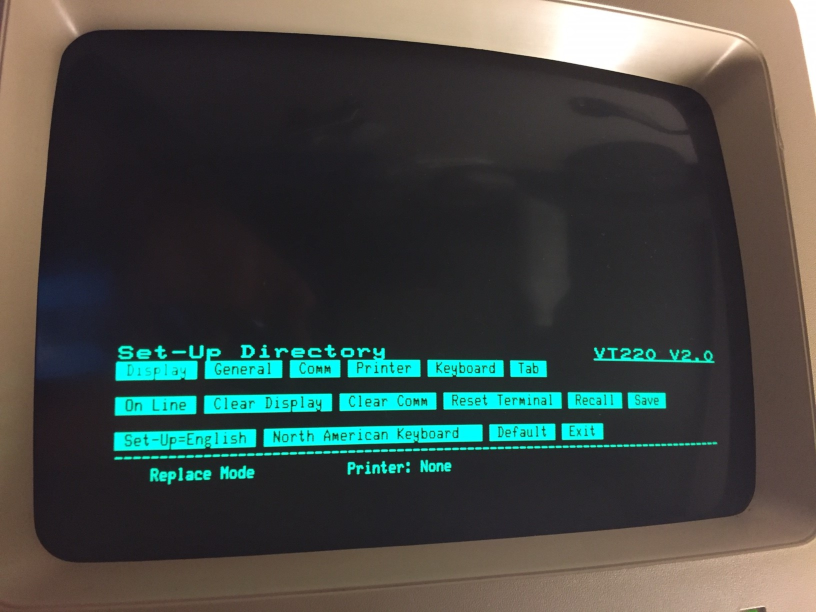

So OS/2 was a dead end, by IBM’s own decision (they shot themselves in the foot…). I lived with Windows NT, and even pushed for (and obtained) a trial of Windows NT 4.0 (beta) at my employer’s office, alongside the serial terminals (RS-232-based VT220s).

The progress was huge, despite the system crashes, if only because developers could now open several Telnet sessions to the server side by side, for example one with a text editor, one with a compiler command line and one with the output of the program under test. A huge step forward…

Why not Linux for that? Because it was not yet mature enough. But it was already usable for some interesting tasks, such as managing multiple modems at once (it was truly multitasking from the beginning), or sharing resources over networks (using the NFS standard, for example).

At AT&T, in the UK, I noticed that they lacked the power of standard tools such as “make”, the well-known utility. So I installed it and trained my colleagues to use it, in order to avoid the recurring regressions when rebuilding a new release from a bunch of modified files.

But this initiative was not appreciated by management, as “make” was not software approved by the I.T. department!

I informed them that “make” was part of Unix and had been created at Bell Labs, a company founded and owned by AT&T in 1925, so basically “make” was their own software!
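The rule that lets “make” avoid such regressions is simple: a target is rebuilt when it is missing or older than any of its prerequisites, and prerequisites are brought up to date first. Below is a minimal Python sketch of that rule, assuming file modification times are given as plain integers in a dictionary; the file names and the `make` function are hypothetical, and the real tool does much more (recipes, pattern rules, parallel builds).

```python
from typing import Dict, List

# Hypothetical project: app is linked from two object files, both depending on defs.h.
RULES: Dict[str, List[str]] = {
    "app": ["main.o", "util.o"],
    "main.o": ["main.c", "defs.h"],
    "util.o": ["util.c", "defs.h"],
}

def needs_rebuild(target: str, prereqs: List[str], mtime: Dict[str, int]) -> bool:
    """make's core rule: rebuild if the target is missing or older than a prerequisite."""
    if target not in mtime:
        return True
    return any(mtime[p] > mtime[target] for p in prereqs)

def make(goal: str, rules: Dict[str, List[str]], mtime: Dict[str, int], rebuilt: List[str]) -> List[str]:
    """Bring prerequisites up to date first (depth-first), then decide about `goal`."""
    for prereq in rules.get(goal, []):
        make(prereq, rules, mtime, rebuilt)
    if goal in rules and needs_rebuild(goal, rules[goal], mtime):
        rebuilt.append(goal)                   # here the real tool would run the recipe
        mtime[goal] = max(mtime.values()) + 1  # the fresh output is now the newest file
    return rebuilt

# Everything was built once; then util.c was edited (time 5).
mtime = {"main.c": 1, "util.c": 5, "defs.h": 1, "main.o": 2, "util.o": 2, "app": 3}
print(make("app", RULES, mtime, []))  # ['util.o', 'app']
```

Only `util.o` and `app` are rebuilt after `util.c` changes, while `main.o` is left alone: no forgotten recompilation and no needless one, which is exactly what hand-run rebuilds of “a bunch of modified files” tend to get wrong.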

This taught me how stupid management can be. I have met this kind of situation several times since then: tools that should obviously be used, but are forbidden because they are “not approved” by people who do not understand anything about computers… A frequent variant is software that is banned because it has not been paid for. Some managers still think that software licenses are prices, which is totally false: software licenses are just legal, binding contracts specifying the conditions of use, sometimes with a usage fee, but not necessarily, and even a software license is optional!

Later, I encountered another kind of toxic human behaviour: some colleagues, freelancers, were producing cryptic code on purpose or writing misleading comments. This made them indispensable. I even proved that some were deleting other people’s code, in order to force their customer (the employer company) to sign contract extensions at their highest rates. I informed management, and they told me: “we know, but what can we do?”.

OK, so obviously companies like to become hostages of their own workers, just as they like to become hostages of proprietary software, undocumented code, unmaintainable systems, cryptic data storage formats and media, incompatible hardware (connectors, protocols), and so on.

This is totally stupid, counter-productive and against their own interest, but that is how it is, and that is why some businesses still remain in the hands of misbehaving, counter-productive, toxic software vendors, such as Oracle, Microsoft or Apple, obviously.

I was also told that such “free software” was not allowed on the AT&T network, which seemed strange to me, as we were developing with Emacs, and Emacs, as far as I know, is the paradigm of “Free Software”. I then understood that those managers, working in the international branch of a leading I.T. company, in the software development business, did not understand the difference between Free Software and freeware, even though they are almost the very opposite of each other…

Not only did they not understand those basic and very important concepts, not only did they think that a software license is a price, but they all used WinZip and other freeware daily, without worrying that this piece of software ran on their desktops and accessed their most valuable data, while they knew nothing about its author(s) or its potential hidden features, as the source code was unavailable!

So “make” is a problem, but WinZip isn’t.

With time, Linux became more and more mature and fit for professional use, and I never lost contact with it, so whenever applicable, I proposed Free Software and/or Linux-based solutions to my employers.

FreeSoftware/Linux rocks

For example, at Astra-Net (the Internet-by-satellite spin-off of SES-Astra), we needed an FTP server. It was decided that it would run on Windows NT 4.0. It was installed by a Windows specialist and put into production. The problem was that it crashed every single week… And it was required for uploading our customers’ content, intended to be broadcast by satellite.

The problem was an “out of memory” error, in other words a memory leak: a problem that cannot be solved reliably if the source code is not available… The I.T. guy in charge tried all the tricks found on the web, without success.

Yes, if you use proprietary software, even if you spent years studying at the best universities, you can’t fix it. You can’t even find out reliably what is actually happening, as the software’s behaviour is totally hidden from you: it is compiled, and without its source code, you basically can’t do anything. So the best you can do is search the Internet and hope somebody has found a solution to your problem.

This is not satisfying. This is not professional. This is not the way a technician should work. It is fine for cooks, not for engineers.

As the server still crashed repeatedly, management decided to send this guy to the USA to attend a very expensive Microsoft training course in Redmond on the proper management of a Windows NT 4.0 server.

He flew there, travelled for two weeks, enjoyed his trip and cost SES-Astra a lot. Once back, he applied the recommended fix: buy and install a memory upgrade. So the system, fitted with 64 MB of RAM, jumped to 128 MB.

The situation changed: instead of crashing every week, the server now crashed every two weeks. Well done!

As management became more and more nervous, and the customers complained about failing uploads and unavailable service, I decided to apply a fix: take two old, retired computers, install a Linux distribution on them with redundant hard drives (software RAID provided by the Linux kernel), configure the SCSI card so that it could boot from the second drive if the first failed, and install an FTP server on them.

One year later, the manager came to our office and told us that this training in Redmond had finally been a success, as the FTP server had now been up and running for a full year without a single outage!

We had to tell him the truth…

He objected that the Astra-Net spin-off was already using HP-UX, SunOS, Windows 2000, Cisco IOS, Windows NT, Windows XP, … so if Linux were added to the required skills, it would become impossible to hire any new staff.

Ok, I got it. But…

- The HP-UX machine was there just to monitor the network using the SNMP standard, which a Linux box could also do.

- The SunOS station was running a firewall, that was able to run on Linux as well.

- I was working full-time on a Linux workstation (installed without the company’s approval), running the tools we needed (mainly Lotus Notes) on WineHQ.

- Belgacom was using Cisco routers running a modified Linux distribution, as Linux could provide services that Cisco’s own software could not, on their own hardware…

- POST Luxembourg was also providing Linux-based routers and firewalls, on Cobalt Cube hardware, to professional users in Luxembourg, so Linux was obviously fit to run a firewall, a router and other network services.

- And the Windows NT, Windows 2000 or Windows XP stations were used mainly by our customers, so we didn’t need to manage them, just as we didn’t manage their IOS-based or other stations.

There was indeed a problem of “universal skill requirements” at SES-Astra, as we also needed skills in the satellite and networking domains, including multicast technologies, the DVB world and so on. But if simplification was a goal, Linux was obviously the solution…

Later, SES-Astra contributed strongly to the development of the Linux kernel’s IPv6 stack and other features (such as IPv4 extensions for the satellite world). And once again, upper management didn’t understand what was going on:

One week after the IPv6 work was finished, the newly released public Linux kernel contained the feature, so management said: “we spent a year and a half developing this, when we could just have waited one more week to get it from the standard kernel!”.

They just didn’t understand what free software is about… Community work, sharing the effort, sharing the result. It was no coincidence: the IPv6 implementation available worldwide in the Linux kernel was, obviously, their own contribution to the Linux kernel project, which they had been enjoying freely for years!

I then left SES-Astra but kept working in the satellite domain for some more years, collaborating with Alcatel Space, ESA, CNES and other famous names, always on Free Software and Linux only. During my trips between Luxembourg and Paris, I noticed that my very same laptop, when running Linux, could keep its charge for the whole trip (4 hours at that time, before the TGV…), whereas the same work done on Windows 2000 gave only an hour and a half of battery life. The cause was visible: when the Windows partition was run in a virtual machine, the virtualiser reported a permanent 100% virtual CPU usage, as Windows was not managing power properly, polling instead of relying on interrupt-driven events, and so on.
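The difference between polling and interrupt-driven waiting can be shown with a small Python sketch. It is only an analogy (it does not measure power, and it is not how either Windows 2000 or Linux handles idle time in the kernel; the helper names are hypothetical): `busy_poll` spins on a flag and keeps a CPU core at 100% until a timer sets it, whereas `threading.Event.wait` blocks and lets the CPU idle until it is signalled.

```python
import threading

def busy_poll(flag: threading.Event) -> int:
    """Polling: keep checking the flag in a tight loop, burning CPU the whole time."""
    checks = 0
    while not flag.is_set():
        checks += 1  # every iteration is wasted CPU work (and battery)
    return checks

def event_driven_wait(flag: threading.Event, timeout: float) -> bool:
    """Event-driven: sleep until signalled; the thread uses no CPU while blocked."""
    return flag.wait(timeout)

def signal_later(flag: threading.Event, delay: float) -> None:
    """Simulates the 'hardware' raising an event after `delay` seconds."""
    threading.Timer(delay, flag.set).start()

flag = threading.Event()
signal_later(flag, 0.05)
checks = busy_poll(flag)
print(f"polling: checked the flag {checks} times in about 50 ms")

flag2 = threading.Event()
signal_later(flag2, 0.05)
woke = event_driven_wait(flag2, timeout=1.0)
print(f"event-driven wait: woke up = {woke}, no spinning needed")
```

Both waits last about 50 ms and end at the same moment, but only the polling one keeps the processor busy throughout; scaled up to a whole operating system, that is the difference between a CPU that can drop into low-power states and one that never rests.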

I don’t know whether it’s still the case, but Linux was already able to manage resources properly, including power (the battery), back in those days, and this matters more and more as energy becomes an ever more sensitive subject.

I then joined a more local service company. Our main business was help-desk support, but the company infrastructure and internal projects were based on Free Software and Linux only. I set up remote assistance tools, as I did for Abil’I.T, developed signage systems, and worked on the first digital cinema projection equipment.

On one side, the major studios in the USA were developing such devices based on Linux; on the other, some European startups (in Liège, Belgium) were struggling to make them run on Windows XP, a monolithic system absolutely unsuited to such use…

Once again, Europe was forcing the use of a foreign, unsuitable proprietary operating system and software ecosystem, while the state-of-the-art companies had already adopted the best tool in town, Linux, which is (let me remind you) actually a European product!

Of course, as usual, the US products prevailed. Not because they are better, just because they are not as stupid as we are…

I also managed the Oracle-based RDBMS of the European Court of Auditors, in Luxembourg, a Sun Solaris cluster-based system.

I managed the CMCM main server, a Red Hat Linux machine running Oracle RDBMS and a few other tools. It ran on a single thread of a single CPU, even though the hardware was a quad-CPU system, just because the pan-European company that sold and installed it was unable to install an SMP Linux kernel properly…

All that experience demonstrated to me, very clearly, that Free Software is entirely sufficient for running

- an industry,

- an office,

- an administration,

- a state,

- a country

- …

and that it is, in fact, by far the best solution for every purpose.

Not only at cost level.

Not only for strategic, sovereignty and security reasons.

Not only because it is more efficient, safer and better performing.

But also because the software development model defined by the Free Software philosophy brings better value to people for much less effort, and produces sustainable software, as the code is usually written in standard (and therefore long-lasting) languages, relies on freely available libraries, and can run on various platform architectures, using CPUs from different manufacturers.

The Free Software business model is the one that best fits customers’ needs.

And that is why I launched Abil’I.T, a company based on human values and ethics, not on expectations of large profits.

FreeSoftware, not Linux

What Abil’I.T. is about is bringing all the power of Free Software (including Open Source software) to the business world: administrations, industries, offices, …

Linux is there. It is powerful. It is now nearly a monopoly, as more than 90% of the CPUs worldwide are running a Linux kernel.

Is Linux perfect? No.

A more competitive world would be better, as competition promotes innovation. Also, the Linux kernel suffers from design flaws, such as its monolithic nature. A “micro-kernel” architecture would be a better choice for most of its applications.

Also, “one size fits all” is never entirely true. There may be more suitable solutions for specific applications, such as IoT, watches, real-time controllers, …

As the software that makes up a Linux distribution comes from various teams in various locations, it is not as coordinated as software coming from a single source, a single software ecosystem, such as Apple, Microsoft, IBM or Oracle. The kernel, the base libraries (glibc, …), the graphical interface, … are all freely provided by different sources and teams. This means there may be discrepancies, alternative ways of doing things, options, and tuning required.

A Linux software distribution is much richer than a proprietary, closed software ecosystem, so it offers many more choices. Choosing may be seen as a drawback by users who are used to environments where almost no choice was left to them, but it is basically an opportunity for users who are used to enjoying the freedom to choose.

But Linux is there, and it’s available under the GPL, so it’s Free Software. So let’s use it as the foundation for all other Free Software, in the most professional way, for the good of all: citizens, businesses, states, countries.

The OS war is over.

Don’t forget that Linux was once attacked in court and branded “anti-American”:

Darl McBride, then CEO of the SCO Group, even called open source software « anti-American and against the Constitution. ». But they lost.

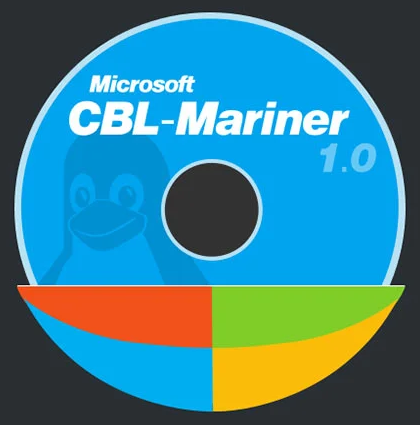

Microsoft tried to claim that having a choice was bad and that Linux had too many variants, and now it has finally published its own Linux distribution…

Don’t forget that Steve Ballmer once said “Linux is a cancer”.

In 2012, the market, their own customers, forced them to open Microsoft’s cloud, Azure, to Linux.

And today, Azure, the Microsoft-owned and operated cloud, is running far more Linux-based nodes than any other OS.

Even Microsoft is using Linux instead of Windows internally…

In 2023, Microsoft published its first Linux distribution.

Steve Ballmer, the very man who said “Linux is a cancer”, now declares:

« I may have called Linux a cancer but now I love it. »

Former Microsoft chief Steve Ballmer once considered « Linux users a bunch of communist thieves and saw open source itself as a cancer on Microsoft’s intellectual property »

He loves it; doesn’t he look like it?

Even Cisco has been sued for using Free Software without respecting the very small constraints that come with its license terms…

China started to get rid of Windows computers a long time ago…

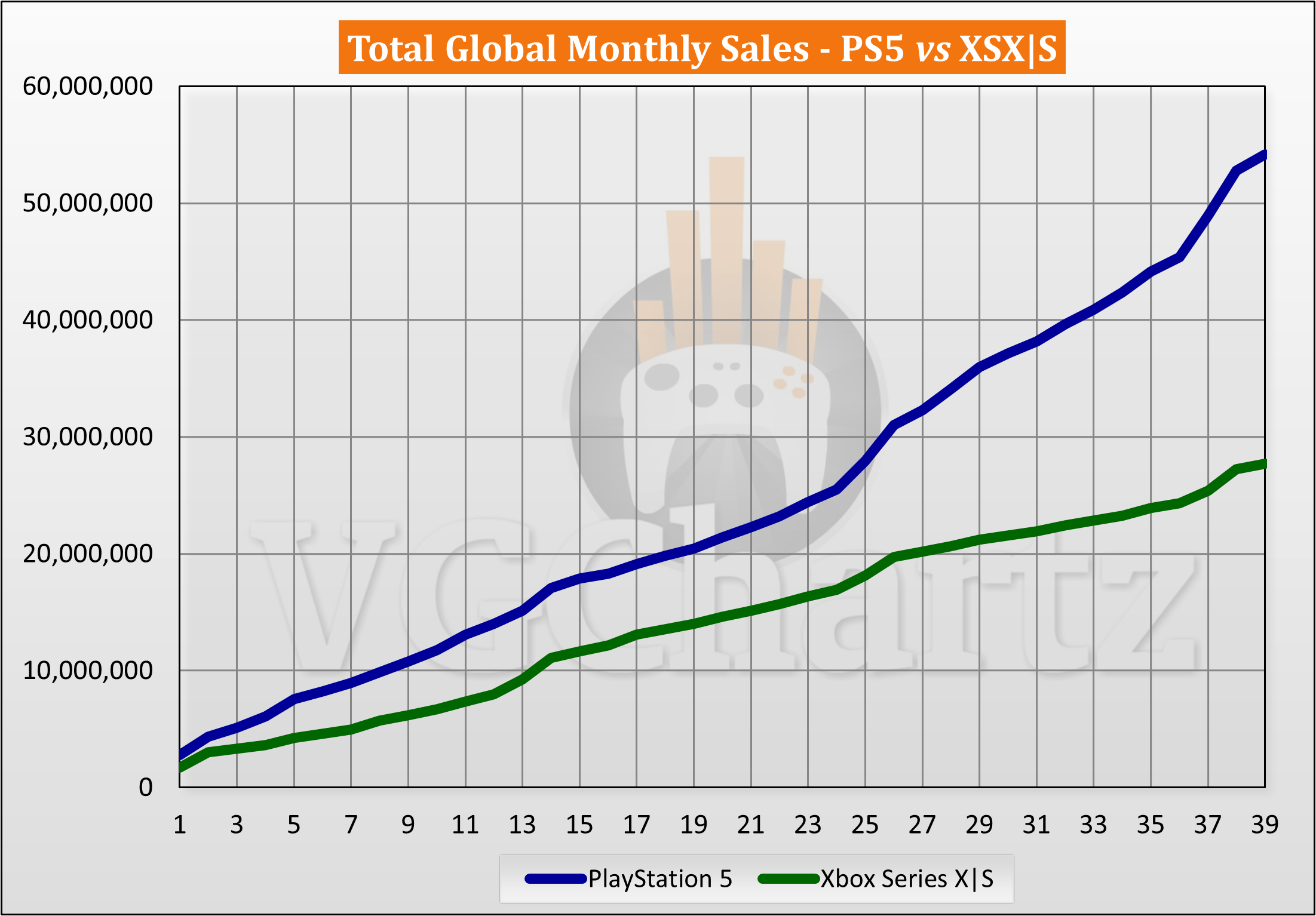

The Sony PlayStation runs on a FreeBSD-based system and beats the Xbox platform.

The Android phone market rocks, while Android is nothing but another Linux distribution, and Windows Phone is dead (and even forgotten).

The IoT world does not run on Windows. The Raspberry Pi is everywhere. Automation devices embraced Linux years ago. Same for connected TV sets (TizenOS, WebOS, Android, …), satellites, rovers, cars, …

The ISS runs on Linux. NASA runs on Linux. CERN runs on Linux and Free Software. Even the US army is more and more widely using Free Software.

Some countries already decided to make the switch, officially.

Others are doing it, without communicating on it.

We said it. It happened.

The OS war is over. Years ago.

If even Microsoft uses Linux, why don’t you use it ?

If a Volvo car dealer came to work driving an Audi, would you buy a car from him?

How to make the jump ?

You don’t know how to ?

Your software vendor does not sell it ? (It’s free… maybe that is why…)

Your service provider doesn’t know it ?

Come to us.

Contact us.

We have been the specialists for 15+ years.